The past few months have been transformative for the Gemma family of open AI models. First came Gemma 3 and Gemma 3 QAT, delivering state-of-the-art performance across cloud and desktop accelerators. Soon after, Gemma 3n arrived — a mobile-first architecture designed to bring powerful, real-time multimodal AI directly to edge devices. With developer adoption skyrocketing, the so-called “Gemmaverse” has now surpassed 200 million downloads, reflecting the community’s excitement and momentum.

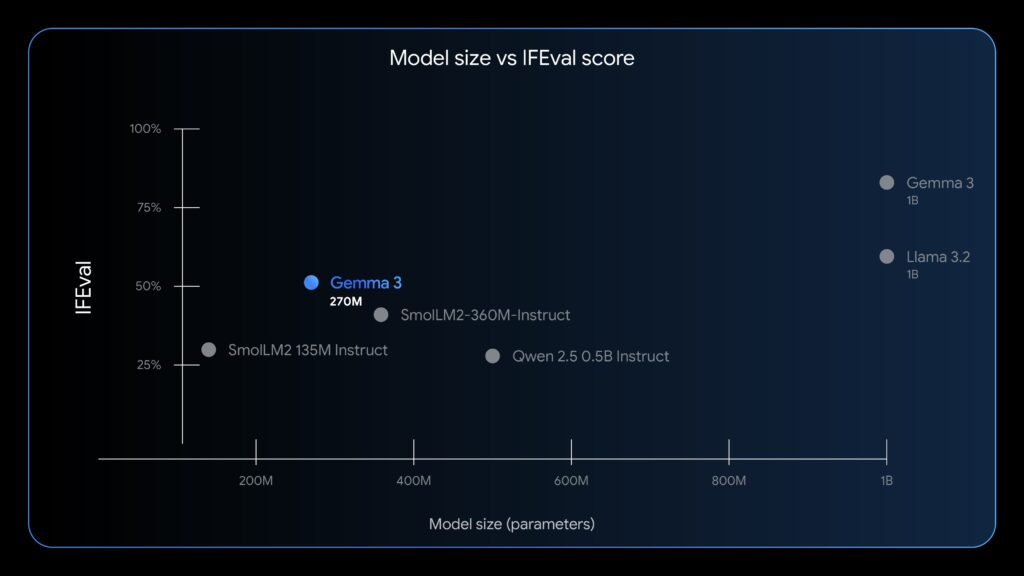

Now, Google is expanding the lineup with Gemma 3 270M, a compact 270-million-parameter model built for task-specific fine-tuning. Despite its small size, it comes pre-trained with strong instruction-following and text-structuring capabilities, making it an efficient, developer-friendly tool for building practical AI applications.

Core Capabilities of Gemma 3 270M

Compact and Capable Architecture

At the heart of Gemma 3 270M is a carefully balanced design with 270 million parameters:

- 170 million embedding parameters (thanks to a large 256k-token vocabulary)

- 100 million transformer block parameters

This expansive vocabulary allows the model to handle rare and domain-specific tokens, making it an excellent base model for fine-tuning across industries and languages.
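The split above means the embedding table holds most of the model's weights. The following back-of-the-envelope sketch makes that concrete. It assumes that "256k" means 256 × 1024 = 262,144 tokens (the exact vocabulary size is not stated here) and that the rounded parameter counts are close enough for an estimate; the variable names are illustrative only.

```python
# Rough arithmetic on the parameter split quoted above.
# Assumption: "256k" is read as 256 * 1024 = 262,144 tokens.
EMBEDDING_PARAMS = 170_000_000
TRANSFORMER_PARAMS = 100_000_000
VOCAB_SIZE = 256 * 1024

total_params = EMBEDDING_PARAMS + TRANSFORMER_PARAMS
embedding_share = EMBEDDING_PARAMS / total_params

# Each vocabulary entry owns one embedding vector, so params / vocab
# approximates the vector width (approximate: the inputs are rounded).
implied_embedding_width = EMBEDDING_PARAMS / VOCAB_SIZE

print(f"total parameters: {total_params:,}")
print(f"embedding share: {embedding_share:.0%}")
print(f"implied embedding width: ~{implied_embedding_width:.0f}")
```

Under these assumptions, roughly 63% of the weights sit in the embedding table, which is unusually high and reflects how much of the budget goes to vocabulary coverage rather than transformer depth.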

Extreme Energy Efficiency

Efficiency is one of Gemma 3 270M’s biggest advantages. In internal tests on the Pixel 9 Pro SoC, the INT4-quantized version consumed just 0.75% of battery for 25 conversations — making it the most power-efficient Gemma model to date.
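To put that figure in perspective, here is a small sketch that turns it into a per-conversation cost. It is a naive linear extrapolation: it assumes every conversation costs the same and that nothing else drains the battery, neither of which the reported test guarantees.

```python
# Figures quoted above for the INT4-quantized model on a Pixel 9 Pro SoC.
BATTERY_PERCENT_USED = 0.75
CONVERSATIONS = 25

# Average battery cost of a single conversation, in percent.
percent_per_conversation = BATTERY_PERCENT_USED / CONVERSATIONS

# Naive linear extrapolation to a full charge (an assumption, not a measurement).
conversations_per_full_charge = 100 / percent_per_conversation

print(f"battery per conversation: {percent_per_conversation:.2f}%")
print(f"conversations per full charge: ~{int(conversations_per_full_charge)}")
```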

Instruction Following

Alongside the pre-trained checkpoint, Google has released an instruction-tuned version of the model. While it is not designed for complex conversational use cases, it follows general instructions well right out of the box.

Production-Ready Quantization

Quantization-Aware Trained (QAT) checkpoints are available, enabling INT4 precision with minimal performance trade-offs. This makes the model production-ready for resource-constrained devices like smartphones and IoT hardware.
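A quick estimate shows why INT4 matters on constrained hardware. The sketch below computes storage for the weights alone at several precisions. It is a simplification: it ignores quantization scales, any layers kept at higher precision, activations, and the KV cache, so real checkpoint sizes will differ.

```python
PARAMS = 270_000_000  # total parameter count quoted in this article


def weight_megabytes(num_params: int, bits_per_weight: int) -> float:
    """Storage for the weights alone, in decimal megabytes."""
    return num_params * bits_per_weight / 8 / 1_000_000


# Compare common precisions; INT4 is the QAT target mentioned above.
for label, bits in [("FP32", 32), ("BF16", 16), ("INT8", 8), ("INT4", 4)]:
    print(f"{label}: ~{weight_megabytes(PARAMS, bits):.0f} MB")
```

By this estimate, moving from 16-bit to 4-bit weights cuts weight storage to a quarter, from about 540 MB to about 135 MB.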

The Right Tool for the Job

In AI, as in engineering, efficiency matters more than brute force. You wouldn’t use a sledgehammer to hang a picture frame, and you don’t need a multi-billion-parameter model to solve every problem.

Gemma 3 270M embodies this principle. It’s a lean foundation model that follows instructions effectively out of the box, but its true strength emerges through fine-tuning. With specialization, it can handle tasks like text classification, data extraction, and semantic search with remarkable accuracy, speed, and cost-effectiveness.

By starting with a compact, capable model, developers can build production-ready systems that are faster, more affordable, and easier to deploy at scale.

A Real-World Blueprint for Success

The value of this approach has already been proven. For example, Adaptive ML partnered with SK Telecom to solve nuanced, multilingual content moderation challenges. Instead of relying on a massive, general-purpose system, they fine-tuned a Gemma 3 4B model.

The results? The specialized Gemma model not only matched but outperformed much larger proprietary models on its specific tasks — all while being more efficient and cost-effective.

With Gemma 3 270M, developers can take this strategy even further: building fleets of compact, specialized models, each fine-tuned for a single task, from enterprise-level moderation to lightweight creative apps.

One example? A Bedtime Story Generator web app that showcases how compact models can also power engaging consumer-facing experiences.

When to Choose Gemma 3 270M

Gemma 3 270M inherits the advanced architecture and robust pre-training of the Gemma 3 family, making it a strong foundation for building custom AI applications. But where does it really shine? Here are the scenarios where Gemma 3 270M is the perfect fit:

High-Volume, Well-Defined Tasks

Great for sentiment analysis, entity extraction, query routing, unstructured-to-structured text processing, creative writing, and compliance checks.
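As an illustration of unstructured-to-structured processing, here is a minimal sketch of an extraction wrapper. Everything in it is hypothetical: `build_extraction_prompt`, `extract_fields`, and the prompt wording are invented for this example, and `fake_generate` is a stand-in for a call to a fine-tuned model. The point is the shape of the pipeline: ask for JSON, parse defensively, and keep only the fields you requested.

```python
import json
from typing import Callable


def build_extraction_prompt(text: str, fields: list[str]) -> str:
    """Build a prompt asking the model for a JSON object (wording is illustrative)."""
    field_list = ", ".join(fields)
    return (
        "Extract the following fields from the text and reply with JSON only.\n"
        f"Fields: {field_list}\n"
        f"Text: {text}"
    )


def extract_fields(text: str, fields: list[str], generate: Callable[[str], str]) -> dict:
    """Call the model and return exactly the requested fields."""
    raw = generate(build_extraction_prompt(text, fields))
    try:
        parsed = json.loads(raw)
    except json.JSONDecodeError:
        parsed = {}  # small models can emit malformed JSON; fail soft
    if not isinstance(parsed, dict):
        parsed = {}
    # Drop unrequested keys; fields the model omitted become None.
    return {field: parsed.get(field) for field in fields}


def fake_generate(prompt: str) -> str:
    # Stand-in for a fine-tuned model; returns a canned response.
    return '{"name": "Ada Lovelace", "city": "London", "extra": "ignored"}'


result = extract_fields(
    "Ada Lovelace lives in London.", ["name", "city", "email"], fake_generate
)
print(result)
```

In a real deployment, `fake_generate` would be replaced by whatever inference call your runtime provides, and the defensive parsing becomes more important, not less.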

Speed and Cost Efficiency

If every millisecond and micro-cent matters, this compact model drastically reduces inference costs in production. A fine-tuned 270M model can run on lightweight infrastructure or even directly on-device, delivering blazing-fast responses.

Rapid Iteration and Deployment

Its small size makes it easy to fine-tune quickly. Developers can test multiple configurations and find the optimal setup in hours, not days.

Privacy by Design

Since the model can run entirely on-device, applications can process sensitive data without relying on the cloud — a major win for privacy-conscious industries.

Fleets of Specialized Models

Because of its efficiency, you can deploy multiple specialized task models without breaking your budget — each one fine-tuned to excel at a specific job.
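One way to picture such a fleet is a registry that maps each task name to its own specialized model. The sketch below is hypothetical: `ModelFleet` is not part of any Gemma tooling, and the two stub functions stand in for separately fine-tuned models so the example runs without downloading weights.

```python
from typing import Callable, Dict

ModelFn = Callable[[str], str]


class ModelFleet:
    """Maps a task name to the specialized model that handles it."""

    def __init__(self) -> None:
        self._models: Dict[str, ModelFn] = {}

    def register(self, task: str, model: ModelFn) -> None:
        self._models[task] = model

    def run(self, task: str, text: str) -> str:
        if task not in self._models:
            raise KeyError(f"no model registered for task {task!r}")
        return self._models[task](text)


# Stand-ins for two fine-tuned models (keyword rules, not real inference).
def sentiment_stub(text: str) -> str:
    return "positive" if "great" in text.lower() else "negative"


def routing_stub(text: str) -> str:
    return "billing" if "invoice" in text.lower() else "general"


fleet = ModelFleet()
fleet.register("sentiment", sentiment_stub)
fleet.register("routing", routing_stub)

print(fleet.run("sentiment", "This phone is great"))
print(fleet.run("routing", "Where is my invoice?"))
```

Keeping each task behind its own entry means a single model can be retrained or swapped without touching the others.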

Get Started with Fine-Tuning

Google has made it simple to customize Gemma 3 270M with familiar tools and platforms:

- Download the model → Available on Hugging Face, Ollama, Kaggle, LM Studio, and Docker (both pre-trained and instruction-tuned versions).

- Try the model → Experiment on Vertex AI or with inference tools like llama.cpp, gemma.cpp, LiteRT, Keras, and MLX.

- Fine-tune the model → Use frameworks like Hugging Face, Unsloth, or JAX to adapt Gemma 3 270M to your specific needs.

- Deploy your solution → Roll out your fine-tuned model anywhere — from your local environment to Google Cloud Run.
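For the "try the model" step, a minimal sketch using the Hugging Face `transformers` text-generation pipeline might look like the following. Several things here are assumptions: that a recent `transformers` release with Gemma 3 support is installed, that the instruction-tuned checkpoint is published under the ID `google/gemma-3-270m-it`, and that you have accepted the model license on Hugging Face. The helper names `build_messages` and `generate_reply` are made up for this example.

```python
def build_messages(user_text: str) -> list[dict]:
    """Wrap a prompt in the chat-message format the pipeline accepts."""
    return [{"role": "user", "content": user_text}]


def generate_reply(
    user_text: str,
    model_id: str = "google/gemma-3-270m-it",  # assumed model ID; verify before use
    max_new_tokens: int = 64,
) -> str:
    # Imported lazily so build_messages works without the library installed.
    from transformers import pipeline

    generator = pipeline("text-generation", model=model_id)
    outputs = generator(build_messages(user_text), max_new_tokens=max_new_tokens)
    # With chat-style input, the pipeline returns the whole conversation;
    # the last message is the model's reply.
    return outputs[0]["generated_text"][-1]["content"]


# Example call (downloads the weights on first use):
# print(generate_reply("Classify the sentiment: 'The battery life is excellent.'"))
```

The pipeline is rebuilt on every call here for brevity; a real application would create it once and reuse it.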

The Gemmaverse Vision

The Gemmaverse is built on the idea that innovation doesn’t always require massive models. With Gemma 3 270M, Google is empowering developers to build smarter, faster, and more efficient AI solutions tailored to specific use cases.

We’re excited to see the specialized models and real-world applications the community will create with this compact but powerful tool.